| dc.contributor.author | Moran Ledesma, Marco Aurelio | |

| dc.contributor.author | Schneider, Oliver | |

| dc.contributor.author | Hancock, Mark | |

| dc.date.accessioned | 2021-12-21 15:31:42 (GMT) | |

| dc.date.available | 2021-12-21 15:31:42 (GMT) | |

| dc.date.issued | 2021-11-05 | |

| dc.identifier.uri | https://doi.org/10.1145/3486954 | |

| dc.identifier.uri | http://hdl.handle.net/10012/17792 | |

| dc.description | ©2021 Association for Computing Machinery. This is the author’s version of the work. It is posted here for your personal use. Not for redistribution. The definitive Version of Record was published in Proceedings of the ACM on Human-Computer Interaction, https://doi.org/10.1145/3486954. | en |

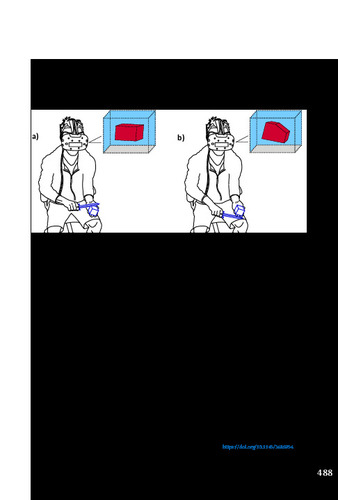

| dc.description.abstract | When interacting with virtual reality (VR) applications like CAD and open-world games, people may want to use gestures as a means of leveraging their knowledge from the physical world. However, people may prefer physical props over handheld controllers to input gestures in VR. We present an elicitation study where 21 participants chose from 95 props to perform manipulative gestures for 20 CAD-like and open-world game-like referents. When analyzing this data, we found existing methods for elicitation studies were insufficient to describe gestures with props, or to measure agreement with prop selection (i.e., agreement between sets of items). We proceeded by describing gestures as context-free grammars, capturing how different props were used in similar roles in a given gesture. We present gesture and prop agreement scores using a generalized agreement score that we developed to compare multiple selections rather than a single selection. We found that props were selected based on their resemblance to virtual objects and the actions they afforded; that gesture and prop agreement depended on the referent, with some referents leading to similar gesture choices, while others led to similar prop choices; and that a small set of carefully chosen props can support multiple gestures. | en |

| dc.description.sponsorship | NSERC, Discovery Grant 2016-04422 || NSERC, Discovery Grant 2019-06589 || NSERC, Discovery Accelerator Grant 492970-2016 || NSERC, CREATE Saskatchewan-Waterloo Games User Research (SWaGUR) Grant 479724-2016 || Ontario Ministry of Colleges and Universities, Ontario Early Researcher Award ER15-11-184 | en |

| dc.language.iso | en | en |

| dc.publisher | ACM | en |

| dc.relation.ispartofseries | Proceedings of the ACM on Human-Computer Interaction | |

| dc.subject | human-computer interaction | en |

| dc.subject | similarity measures | en |

| dc.subject | immersive interaction | en |

| dc.subject | agreement score | en |

| dc.subject | elicitation technique | en |

| dc.subject | virtual reality | en |

| dc.subject | gestural input | en |

| dc.subject | 3D physical props | en |

| dc.subject | Research Subject Categories::TECHNOLOGY::Information technology::Computer science::Computer science | en |

| dc.title | User-Defined Gestures with Physical Props in Virtual Reality | en |

| dc.type | Article | en |

| dcterms.bibliographicCitation | Marco Moran-Ledesma, Oliver Schneider, and Mark Hancock. 2021. User-Defined Gestures with Physical Props in Virtual Reality. Proc. ACM Hum.-Comput. Interact. 5, ISS, Article 488 (November 2021), 23 pages. DOI:https://doi.org/10.1145/3486954 | en |

| uws.contributor.affiliation1 | Faculty of Engineering | en |

| uws.contributor.affiliation2 | Games Institute | en |

| uws.contributor.affiliation2 | Management Sciences | en |

| uws.contributor.affiliation2 | Systems Design Engineering | en |

| uws.typeOfResource | Text | en |

| uws.peerReviewStatus | Reviewed | en |

| uws.scholarLevel | Faculty | en |

| uws.scholarLevel | Graduate | en |